Parallel Python with Dask

HPC systems are commonly found in scientific and industrial research labs. The setup used here:

- Apollo HPC Cluster

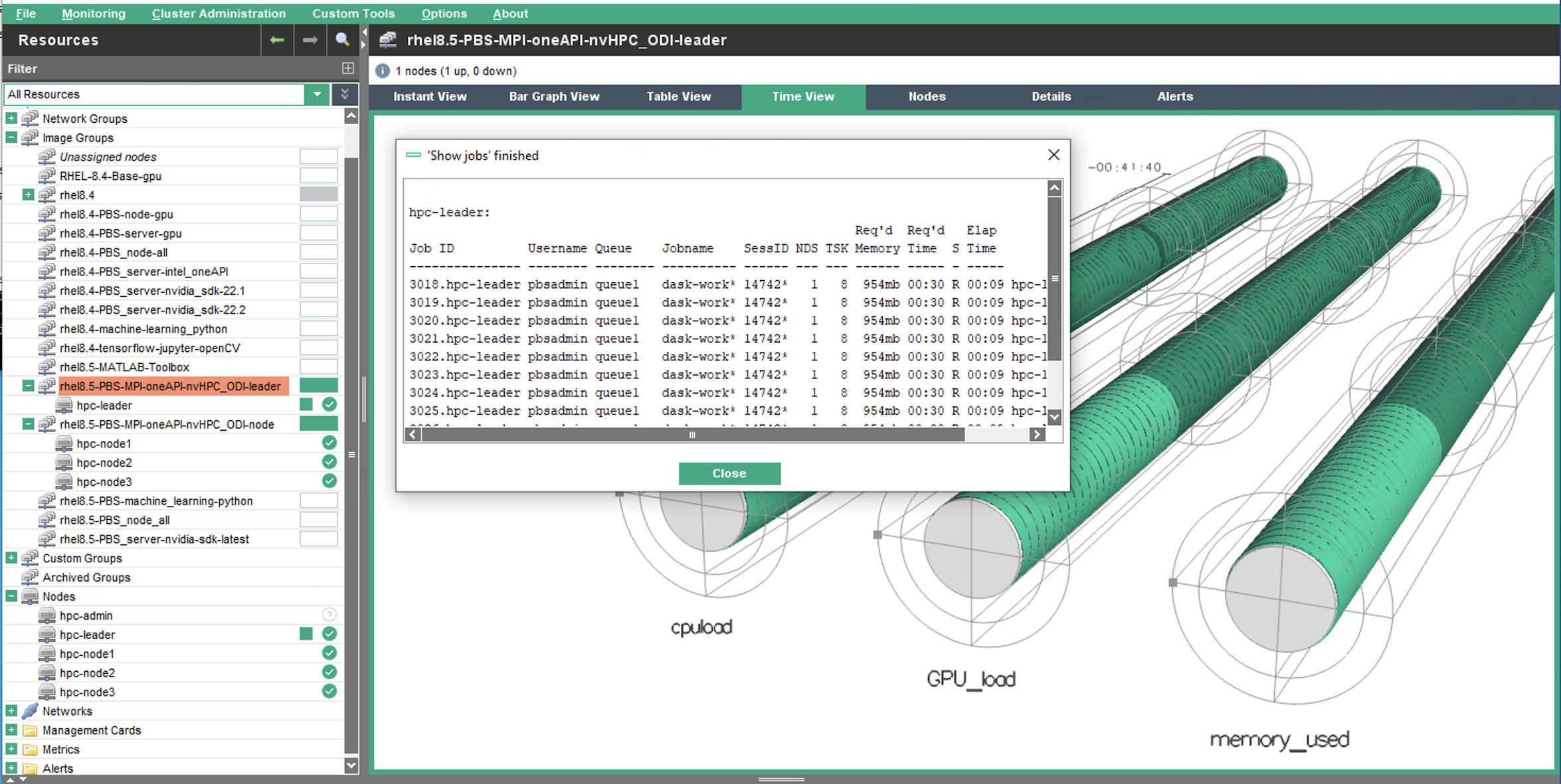

- PBS Pro workload manager

- Python and the Dask library

# pip install dask

dask-jobqueue

The Dask-jobqueue project makes it easy to deploy Dask on common job queuing systems typically found in high performance supercomputers, academic research institutions, and other clusters.

# pip install dask_jobqueue

from dask_jobqueue import PBSCluster

cluster = PBSCluster(cores=20, memory='100GB', queue='queue1')

cluster.scale(jobs=10)
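To make the snippet above concrete, here is a minimal sketch of how the cluster might be used once it is created. In dask-jobqueue, `cores` and `memory` describe a single job, so `scale(jobs=10)` requests ten such jobs. The helper names `total_resources`, `square` and `run_on_pbs` are made up for illustration, and `queue1` is assumed to be a queue that exists on your site.

```python
def total_resources(jobs, cores_per_job, memory_gb_per_job):
    """Return (total cores, total memory in GB) requested across all PBS jobs."""
    return jobs * cores_per_job, jobs * memory_gb_per_job


def square(x):
    """Toy task that each Dask worker will run."""
    return x * x


def run_on_pbs(jobs=10):
    """Start a PBS-backed Dask cluster, run a small computation, clean up."""
    # Imported here so the file can be loaded on a machine without PBS.
    from dask.distributed import Client
    from dask_jobqueue import PBSCluster

    # Same settings as above: 20 cores and 100 GB of memory per PBS job.
    cluster = PBSCluster(cores=20, memory="100GB", queue="queue1")
    cluster.scale(jobs=jobs)  # submit `jobs` PBS jobs that host the workers

    client = Client(cluster)  # connect this process to the cluster
    try:
        futures = client.map(square, range(100))  # one task per input value
        return sum(client.gather(futures))        # collect results locally
    finally:
        client.close()
        cluster.close()


# With the settings above, ten jobs request 200 cores and 1000 GB in total.
print(total_resources(jobs=10, cores_per_job=20, memory_gb_per_job=100))
```

Calling `run_on_pbs()` from a login node submits the jobs; the workers only become available once PBS actually starts them, so the first results can take a while on a busy queue.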

dask-mpi

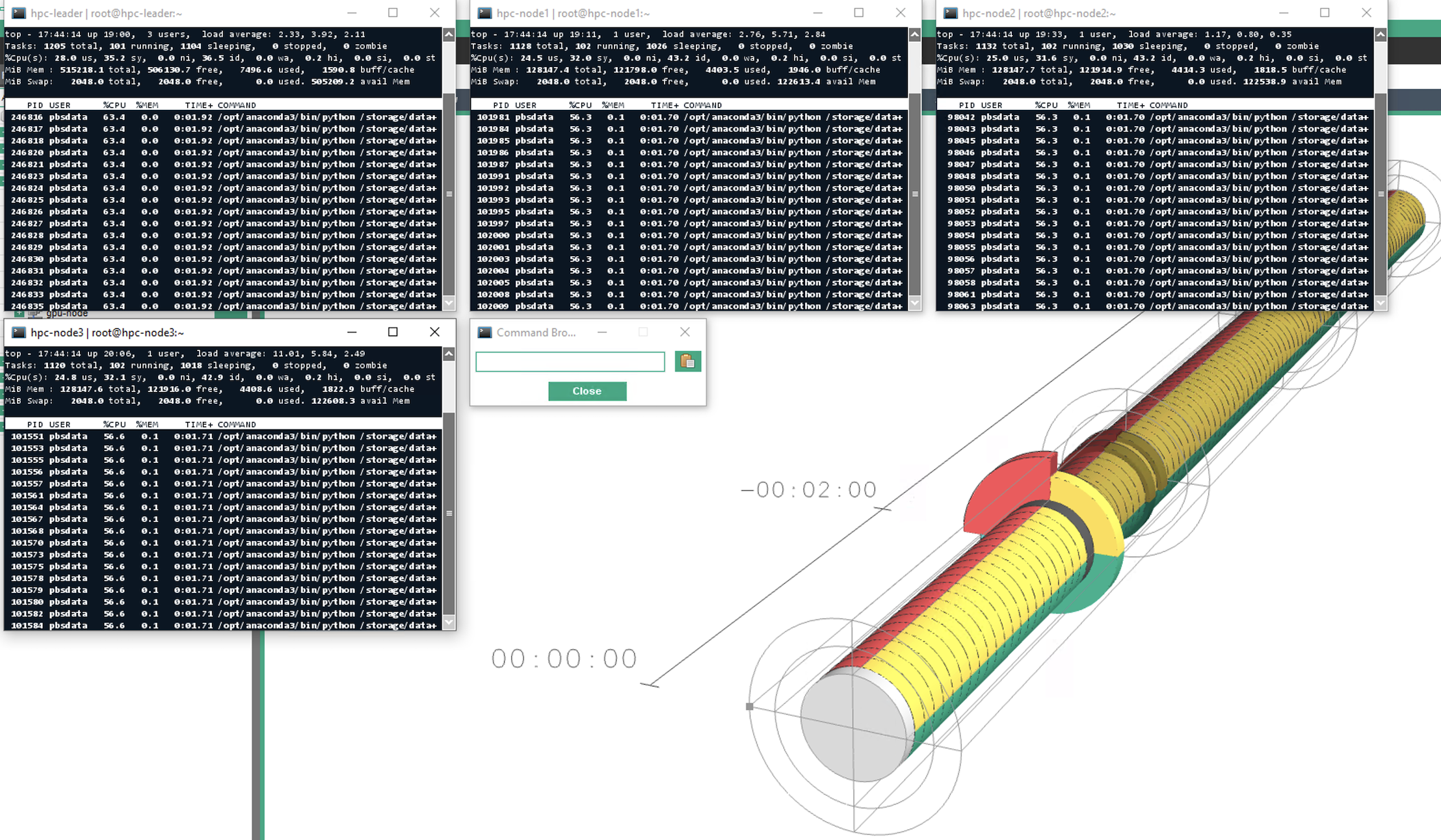

The Dask-MPI project makes it easy to deploy Dask from within an existing MPI environment, such as one created with the common MPI command-line launchers mpirun or mpiexec. Such environments are commonly found in high performance supercomputers, academic research institutions, and other clusters where MPI has already been installed.

# pip install dask_mpi

Execute a Python script that uses the dask-mpi library via MPI (HPE MPI):

$ module load hmpt

$ export MPI_USE_TCP=1

$ mpiexec_mpt hpc-leader,hpc-node1,hpc-node2,hpc-node3 -np 90 /storage/data/sample/python/dask/mpi/odi_pro.py
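The contents of `odi_pro.py` are not shown in this post. As a rough sketch, a script launched this way could look like the following. It assumes the usual dask-mpi layout, where `initialize()` turns rank 0 into the scheduler, lets rank 1 continue as the client, and turns every other rank into a worker. The `worker_count` helper and the toy workload are illustrative only.

```python
def worker_count(n_ranks):
    """Number of Dask workers left after dask_mpi.initialize().

    Rank 0 runs the scheduler and rank 1 runs the client code,
    so only the remaining ranks become workers.
    """
    if n_ranks < 3:
        raise ValueError("need at least 3 MPI ranks: scheduler, client, worker")
    return n_ranks - 2


def square(x):
    """Toy task executed on the workers."""
    return x * x


def main():
    # Imported here so the file can be loaded without an MPI installation.
    from dask_mpi import initialize
    from dask.distributed import Client

    initialize()  # scheduler and worker ranks stay inside this call

    # Only the client rank reaches this point.
    with Client() as client:  # connects to the scheduler started on rank 0
        futures = client.map(square, range(1000))
        print("sum of squares:", sum(client.gather(futures)))


# With -np 90 as in the command above, 88 ranks act as workers.
print(worker_count(90))

# In the script that MPI launches, finish the file with a call to main().
```

Because two ranks are reserved for the scheduler and the client, it is worth accounting for them when choosing the value passed to `-np`.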

Parallel execution on each node

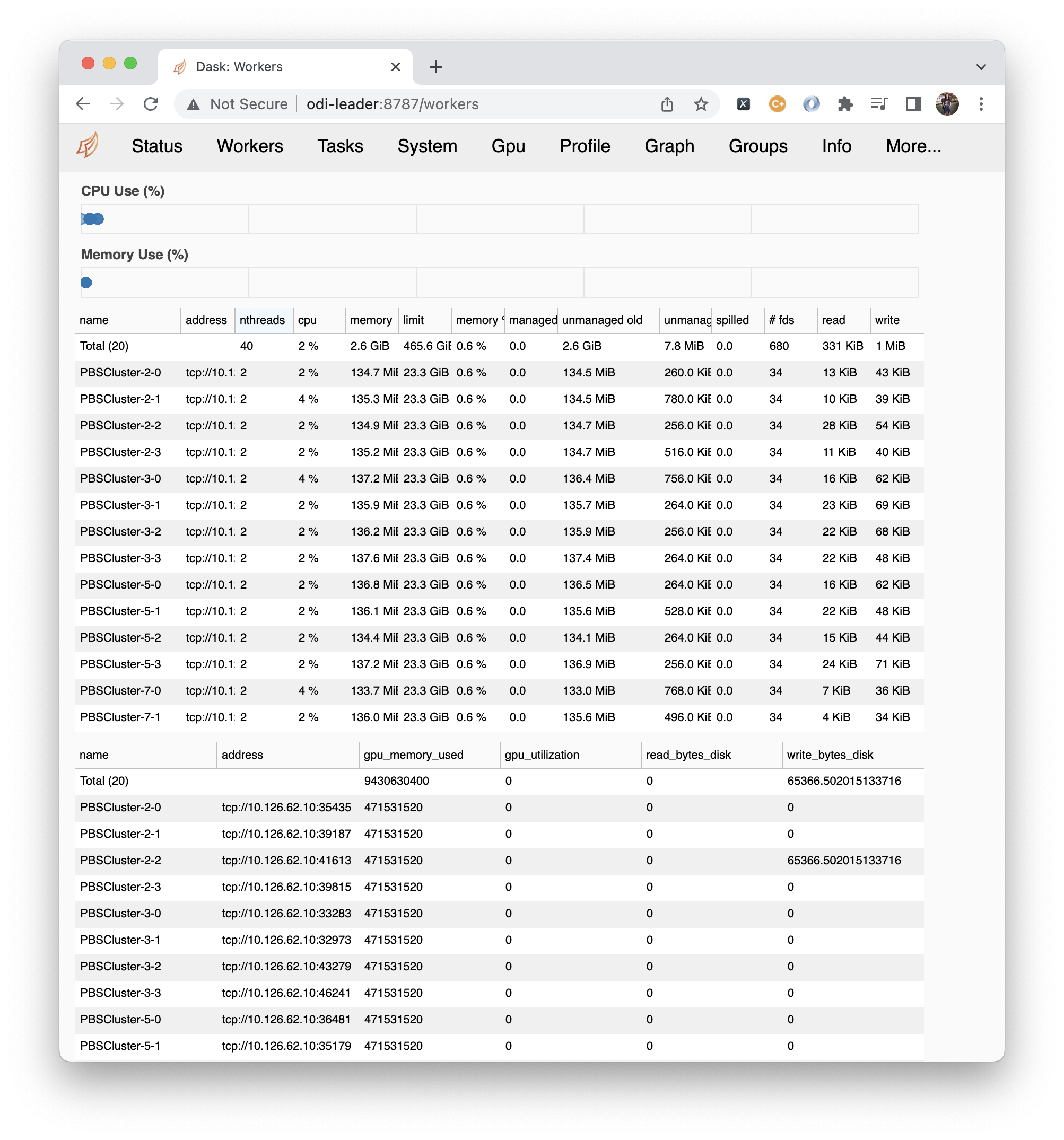

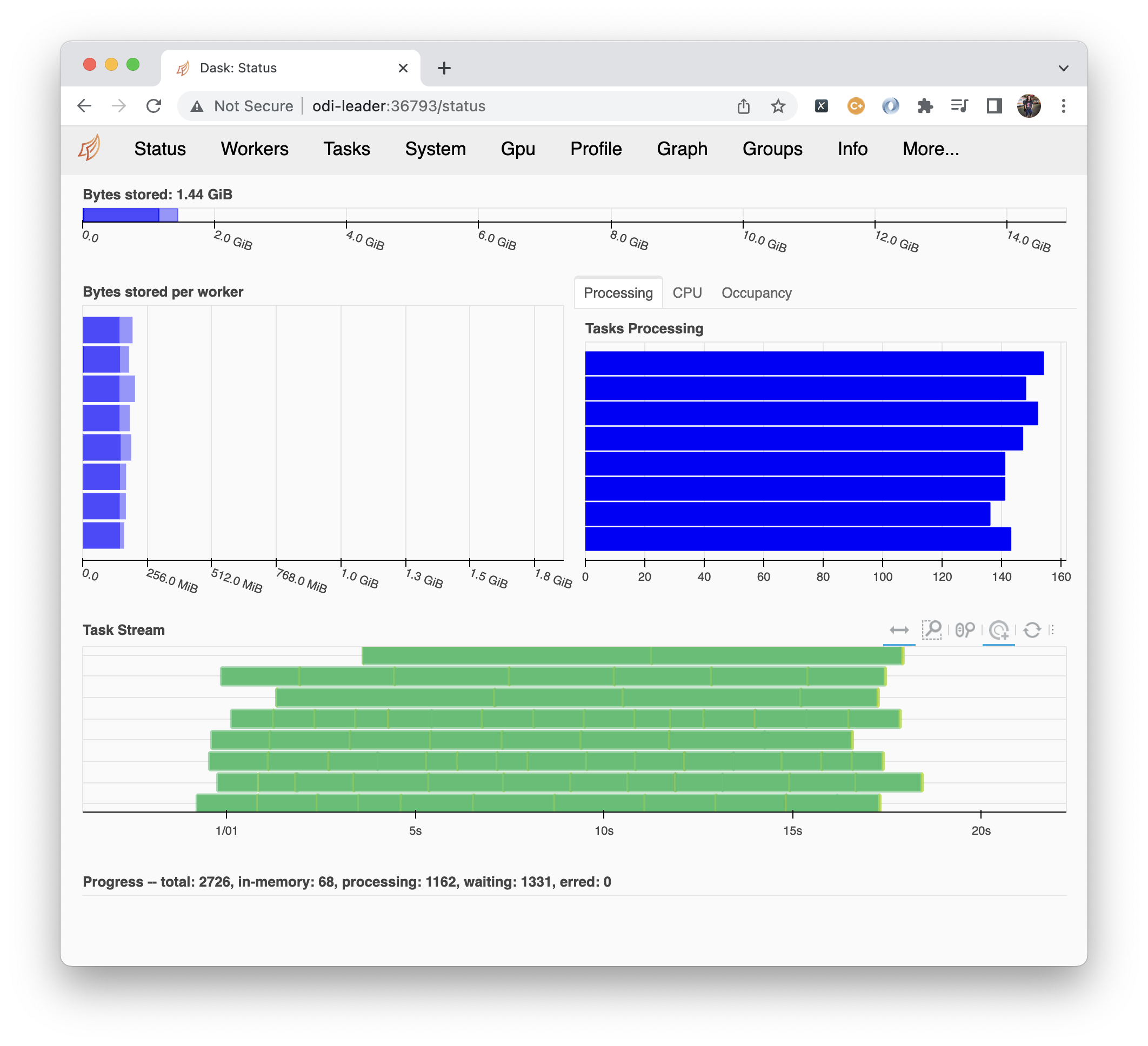

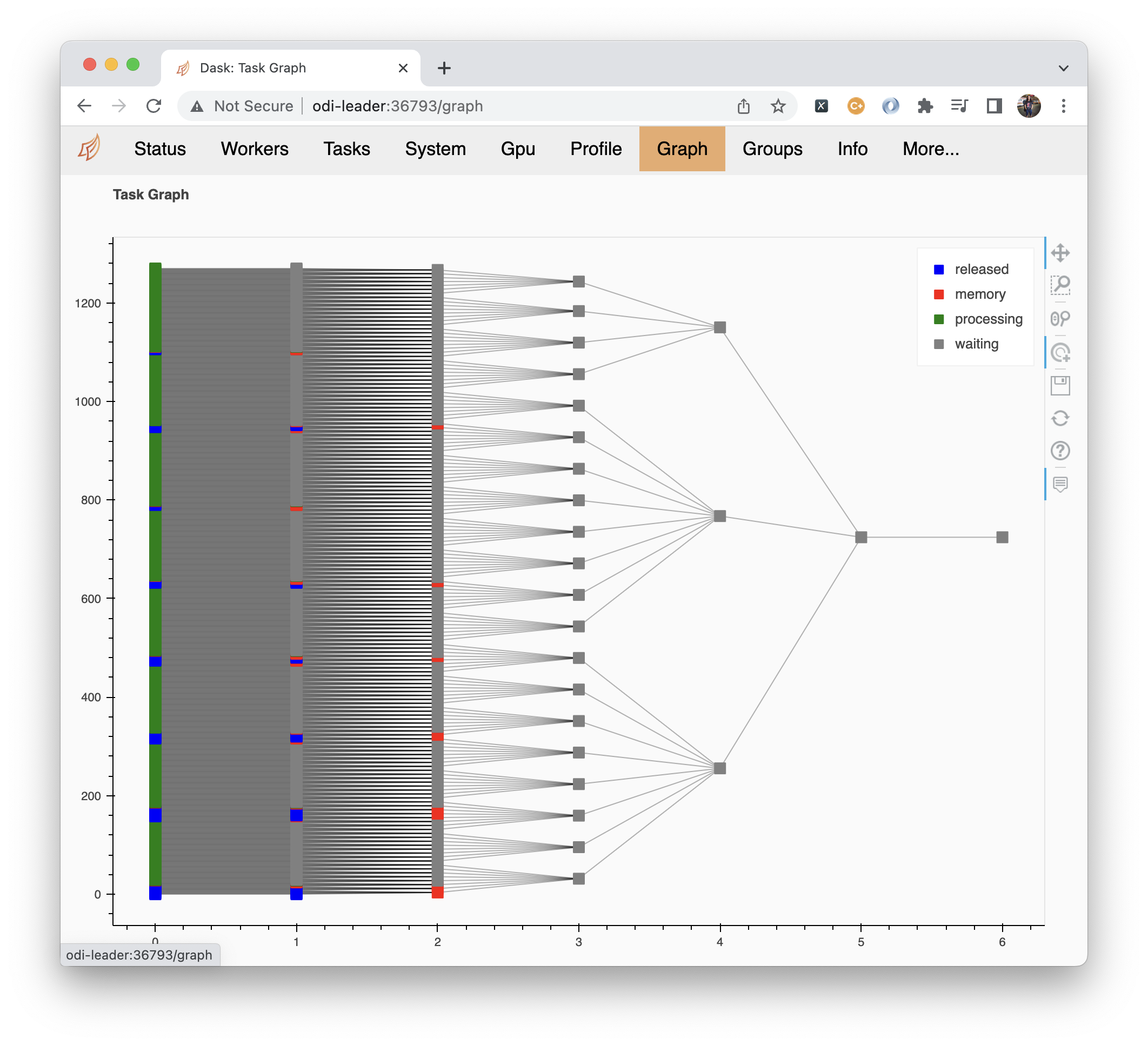

Dask dashboard
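The dashboard is served by the Dask scheduler, by default on port 8787. On a cluster the scheduler host is often not reachable from a laptop, so a common approach is an SSH tunnel through a login node. The sketch below only builds the URL and the tunnel command as strings. It assumes the scheduler runs on `hpc-leader` and uses a hypothetical login host, `login.example.org`; both helper functions are made up for illustration.

```python
def dashboard_url(host, port=8787):
    """URL of the Dask dashboard status page for a scheduler on `host`."""
    return f"http://{host}:{port}/status"


def ssh_tunnel_command(login_host, scheduler_host, port=8787):
    """SSH command that forwards the local `port` to the scheduler's dashboard."""
    return f"ssh -N -L {port}:{scheduler_host}:{port} {login_host}"


# Hypothetical host names; replace them with the ones used on your site.
print(ssh_tunnel_command("login.example.org", "hpc-leader"))
print(dashboard_url("localhost"))
```

When a `Client` is already connected, `client.dashboard_link` reports the address the scheduler is actually using, which is a good way to confirm the host and port.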

